The sophisticated speech recognition software that powers today’s digital assistants like Apple’s Siri certainly seems like a modern, cutting-edge invention, but it may surprise you to learn that this technology isn’t as recent as you might think. Believe it or not, the origins of speech or voice recognition technology actually stretch back more than a century: long before computers, let alone smartphones, came on the scene, people were already dreaming of different ways to talk to machines. Read on for a look back at this remarkable history of technological development.

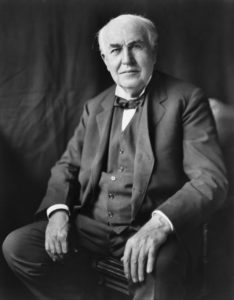

Thomas Edison was experimenting with dictation technology in the late 1800s.

It’s hardly surprising that the prolific and imaginative inventor Thomas Edison was one of the first people to experiment with early forms of speech recognition technology. In 1877, Edison invented the phonograph, the first device that was capable of reproducing sound. Just two years later, in 1879, he created a dictation machine called the “Ediphone” that was capable of recording speech onto wax cylinders; the competing system for voice dictation at this time was the Dictaphone. While neither of these inventions can technically be considered true speech recognition devices, the recording and reproduction capabilities they demonstrated nevertheless laid a vital foundation for future experiments with this technology.

It’s hardly surprising that the prolific and imaginative inventor Thomas Edison was one of the first people to experiment with early forms of speech recognition technology. In 1877, Edison invented the phonograph, the first device that was capable of reproducing sound. Just two years later, in 1879, he created a dictation machine called the “Ediphone” that was capable of recording speech onto wax cylinders; the competing system for voice dictation at this time was the Dictaphone. While neither of these inventions can technically be considered true speech recognition devices, the recording and reproduction capabilities they demonstrated nevertheless laid a vital foundation for future experiments with this technology.

However, it wasn’t until the 1950s that advances were made in genuine speech recognition.

The first real breakthrough in genuine voice recognition came in 1952, during the dawn of the computer age. At this time, Bell Laboratories debuted “Audrey,” a machine that could understand spoken digits between zero and nine—as you might guess, the idea behind Audrey was to eventually allow people to dial the phone through voice commands. Although Audrey boasted an impressive 90% accuracy rate, it turned out that this was only the case when the speech commands were issued by the machine’s inventor. When other people spoke to Audrey, the accuracy rate dropped significantly.

In the example of Audrey, we see one of the biggest challenges that speech recognition technology has struggled with: the fact that each person’s voice is unique and that spoken language isn’t always standard, with accents, dialects, speed, inflection, and emphasis all differing across individuals and populations.

IBM joined the speech recognition game in 1962.

At the 1962 World’s Fair in Seattle, IBM demonstrated its experimental machine known as “Shoebox.” More advanced than Audrey, Shoebox was able to perform arithmetic in response to voice commands: it could recognize 16 spoken words, including the commands “plus,” “minus,” “total,” and the digits between zero and nine. Voice commands were issued to Shoebox by speaking into a microphone; the attached adding machine then performed the requested calculations and printed the answers.

At the 1962 World’s Fair in Seattle, IBM demonstrated its experimental machine known as “Shoebox.” More advanced than Audrey, Shoebox was able to perform arithmetic in response to voice commands: it could recognize 16 spoken words, including the commands “plus,” “minus,” “total,” and the digits between zero and nine. Voice commands were issued to Shoebox by speaking into a microphone; the attached adding machine then performed the requested calculations and printed the answers.

Important advances occurred during the 1970s.

During the 1970s, the US Department of Defense made important contributions to the development of speech recognition technology with the DARPA SUR (Speech Understanding Recognition) program it funded between 1971 and 1976. Under this program, researchers at Carnegie Mellon University created the speech-understanding system known as “Harpy.”

While Harpy boasted a much larger vocabulary than either of its predecessors described above—Harpy was able to recognize more than 1,000 words, equivalent to the vocabulary of an average three-year-old child—the more important breakthrough had to do with how the words were recognized. Rather than identifying words in isolation, Harpy used the more efficient search approach of examining whole sentences. This is something the human brain does naturally, as it allows us to fill in any words we don’t quite hear based on what makes the most sense in the context of the sentence.

New models made headway in the 1980s.

In the 1980s, the limitations of computer processing power still proved to be a difficult obstacle for voice recognition systems to overcome. At this time, many systems could recognize thousands of words, but only if those words were spoken very slowly and enunciated clearly. However, this decade brought a breakthrough that would prove monumental for the future development of the technology: rather than relying on a stored database of fixed templates to match words to, 1980s-era speech recognition systems like IBM’s Tangora used hidden Markov models for word identification.

These models essentially leverage statistics, data clustering, and pattern analysis to predict the likelihood of a particular word originating from an unknown sound. If this sounds somewhat familiar, it’s because the predictive text function on your smartphone is a contemporary example of Markov models in action. The use of Markov models proved extremely successful: after 20 minutes of “training,” Tangora was able to recognize up to 20,000 words, as well as some full sentences.

The first consumer speech recognition product hit the market in the 1990s.

In 1997, voice recognition technology took a huge step forward with the release of Dragon NaturallySpeaking, one of the first consumer speech recognition products. An earlier version, DragonDictate, had been released in 1990, but Dragon NaturallySpeaking was a huge improvement. With Dragon NaturallySpeaking software, it was possible to speak at a normal speed with no pauses between words, and the system was able to recognize up to 100 words a minute. An upgraded form of this software is still in use today by doctors and other professionals whose work requires significant dictation and notation.